But novice Git users often don’t know how to do this well, and the “naive” approach leads to complications. Git is a distributed version control system, which means that everyone can commit to their own copy of a repository. Syncing those commits with upstream is a bit more difficult and sometimes leads to conflicts. So, here’s the aforementioned “naive” approach:

1. Fork the upstream GitHub repository

2. Clone the forked repository to your local machine

3. Make changes

4. Commit

5. Push

6. Send a Pull Request

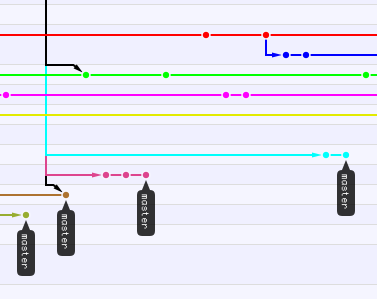
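As a rough sketch, the steps above look like this on the command line. To keep it runnable without a GitHub account, two local bare repositories stand in for upstream and for the fork; the directory names (“upstream.git”, “fork.git”, “local”), the file contents, the commit messages and the throwaway identity are all made up for illustration.

```shell
set -eu
# Throwaway identity so the commits work without any global git config.
export GIT_AUTHOR_NAME=demo GIT_AUTHOR_EMAIL=demo@example.com
export GIT_COMMITTER_NAME=demo GIT_COMMITTER_EMAIL=demo@example.com
work=$(mktemp -d) && cd "$work"

# Stand-in for the official repository, with one commit on "master".
git init -q seed
git -C seed symbolic-ref HEAD refs/heads/master
echo "hello" > seed/README
git -C seed add README
git -C seed commit -q -m "Initial commit"
git clone -q --bare seed upstream.git

# Step 1: fork (on GitHub this is the "Fork" button).
git clone -q --bare upstream.git fork.git

# Step 2: clone the fork to the local machine.
git clone -q "$work/fork.git" local
cd local

# Steps 3-4: make a change and commit it straight to "master".
echo "my change" >> README
git commit -q -a -m "Describe my change"

# Step 5: push "master" back to the fork.
git push -q origin master

# Step 6: the pull request itself is opened in GitHub's web interface.
git log --oneline
```

With a real project, the two “git clone --bare” lines are replaced by the Fork button and the clone URL of your fork.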

It will work, but there’s one non-obvious thing: the pull request probably won’t be merged immediately, and chances are high that other commits will be pushed to the official repository before ours is merged. As a result, the “master” branch of our fork diverges from upstream’s “master”. So, at this point, if we plan to use our forked repository again, we have to merge the upstream changes. It’s not the end of the world, and Git, if we’re lucky enough, will do this merge automatically as part of “git pull upstream master” (assuming a remote named “upstream” points at the official repository), but it won’t be a “fast-forward” merge, and GitHub’s “Network” diagram (or the branches diagram in your favourite Git GUI) won’t be nice and clean anymore.
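Here is a small sketch of that situation, again with local bare repositories standing in for GitHub (all names, files and messages are hypothetical). The “--no-rebase” and “--no-edit” flags are my additions: they keep the demo non-interactive, and recent Git versions may refuse to pull divergent branches unless told how to reconcile them.

```shell
set -eu
export GIT_AUTHOR_NAME=demo GIT_AUTHOR_EMAIL=demo@example.com
export GIT_COMMITTER_NAME=demo GIT_COMMITTER_EMAIL=demo@example.com
work=$(mktemp -d) && cd "$work"

# Stand-ins for the official repository and our fork of it.
git init -q seed
git -C seed symbolic-ref HEAD refs/heads/master
echo "hello" > seed/README
git -C seed add README
git -C seed commit -q -m "Initial commit"
git clone -q --bare seed upstream.git
git clone -q --bare upstream.git fork.git

# Our commit, made directly on "master" (the naive approach).
git clone -q "$work/fork.git" local
cd local
echo "my change" > feature.txt
git add feature.txt
git commit -q -m "My change on master"

# Meanwhile, somebody else's commit lands in the official repository.
echo "their change" > "$work/seed/other.txt"
git -C "$work/seed" add other.txt
git -C "$work/seed" commit -q -m "Someone else's change"
git -C "$work/seed" push -q "$work/upstream.git" master

# Bring upstream's changes into our "master": Git has to create a merge commit.
git remote add upstream "$work/upstream.git"
git pull -q --no-rebase --no-edit upstream master

# The graph now shows the extra merge "knot".
git log --oneline --graph
```

The two changes touch different files here, so Git merges them on its own; edits to the same lines would stop with a conflict instead.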

There’s a better approach, and its name is “Feature Branches”. It’s quite simple. Once you have cloned the forked repository (i.e. after steps 1-2), use the “git checkout -b new_branch_name” command (it’s nice if “new_branch_name” summarises the changes you’re going to implement). This command creates a new branch, starting from the current “master”, and makes it active. Make changes and commit them: the “master” branch is left intact, and everything you changed sits nicely in this new branch. Now, push these changes with the “git push origin new_branch_name” command. This will send your new branch to GitHub. Now, open this branch on GitHub and send a Pull Request from your “new_branch_name” to upstream’s “master”, as usual.
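Sketched as commands, this could look like the following. The two commands from the text are used as written; the local bare repositories, file names and commit messages around them are hypothetical scaffolding so the example runs without GitHub.

```shell
set -eu
export GIT_AUTHOR_NAME=demo GIT_AUTHOR_EMAIL=demo@example.com
export GIT_COMMITTER_NAME=demo GIT_COMMITTER_EMAIL=demo@example.com
work=$(mktemp -d) && cd "$work"

# Stand-ins for the official repository and our fork of it.
git init -q seed
git -C seed symbolic-ref HEAD refs/heads/master
echo "hello" > seed/README
git -C seed add README
git -C seed commit -q -m "Initial commit"
git clone -q --bare seed upstream.git
git clone -q --bare upstream.git fork.git

# Steps 1-2 are done; now work on a feature branch instead of "master".
git clone -q "$work/fork.git" local
cd local
git checkout -q -b new_branch_name

# Make changes and commit them on the new branch.
echo "my change" > feature.txt
git add feature.txt
git commit -q -m "Describe my change"

# Send the new branch to the fork; "master" is not pushed and not touched.
git push -q origin new_branch_name

# Local branches: the new branch is one commit ahead of "master".
git branch -v
```

After this, the fork has a “new_branch_name” branch to open the Pull Request from, while “master” is still identical to upstream’s.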

Use “git checkout master” to return to the “master” branch. Whenever upstream merges your changes and you pull from upstream, they will appear here automatically, without a single “merge” effort from you. As a bonus, you get a beautiful tree of branches without complex knots.
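To see the whole cycle end to end, here is a sketch in which a hypothetical “maintainer” clone plays the role of the Merge button on GitHub. Everything except “git checkout master” is my own scaffolding, and “--ff-only” is added only to prove the point: the pull fails unless it is a pure fast-forward.

```shell
set -eu
export GIT_AUTHOR_NAME=demo GIT_AUTHOR_EMAIL=demo@example.com
export GIT_COMMITTER_NAME=demo GIT_COMMITTER_EMAIL=demo@example.com
work=$(mktemp -d) && cd "$work"

# Stand-ins for the official repository and our fork of it.
git init -q seed
git -C seed symbolic-ref HEAD refs/heads/master
echo "hello" > seed/README
git -C seed add README
git -C seed commit -q -m "Initial commit"
git clone -q --bare seed upstream.git
git clone -q --bare upstream.git fork.git

# Our side: commit on a feature branch and push it to the fork.
git clone -q "$work/fork.git" local
cd local
git checkout -q -b new_branch_name
echo "my change" > feature.txt
git add feature.txt
git commit -q -m "Describe my change"
git push -q origin new_branch_name

# Maintainer side: merge the branch into upstream's "master"
# (this replaces accepting the pull request on GitHub).
git clone -q "$work/upstream.git" "$work/maintainer"
git -C "$work/maintainer" pull -q --no-rebase --no-ff --no-edit "$work/fork.git" new_branch_name
git -C "$work/maintainer" push -q origin master

# Our side again: go back to "master" and pull from upstream.
git checkout -q master
git remote add upstream "$work/upstream.git"
git pull -q --ff-only upstream master

git log --oneline --graph
```

Because our local “master” never received commits of its own, it simply moves forward to wherever upstream’s “master” is.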